- Published on

Unlocking Business Insights with AI, a Step-by-Step Tutorial on Conversing with Your Data

In today's data-driven business landscape, the ability to effectively analyze and derive insights from vast amounts of information has become a critical competitive advantage. Artificial Intelligence (AI) has emerged as a powerful tool to help businesses make sense of their data, enabling marketing and sales teams to uncover valuable insights, identify trends, and make informed decisions. In this tutorial, we will explore how to harness the power of AI to interact with your data, perform analysis, and generate creative solutions using the Crewai and Langchain Python libraries.

Benefits and Use Cases for Businesses

Before diving into the technical details, let's discuss the benefits and potential use cases of leveraging AI for data analysis in a business context:

- Enhanced Decision Making: By conversing with your data using natural language, you can quickly obtain answers to complex questions, enabling faster and more informed decision making.

- Uncovering Hidden Insights: AI-powered analysis can reveal patterns, correlations, and insights that might be overlooked by traditional methods, providing a deeper understanding of your business operations.

- Improved Customer Segmentation: AI can help identify customer segments based on behavior, preferences, and other factors, allowing for targeted marketing campaigns and personalized experiences.

- Sales Forecasting: By analyzing historical sales data and external factors, AI can generate accurate sales forecasts, helping businesses optimize inventory management and resource allocation.

- Competitive Analysis: AI can assist in monitoring competitor activities, identifying market trends, and uncovering opportunities for differentiation and growth.

Now, let's explore how to implement AI-powered data analysis using Crewai and Langchain Python libraries.

Setting Up the Environment

To get started, make sure you have Python installed on your system. We will be using the following libraries:

- Crewai: A library for creating AI agents and crews to perform tasks collaboratively.

- Langchain: A library that provides tools for interacting with databases and performing SQL queries.

You can install these libraries using pip:

pip install crewai[tools] langchain-core langchain-community langchain-groq langchain-experimental

Connecting to the Database

The first step is to connect to your database. In this example, we will be using an SQLite database stored in a file named my_database.db. You can replace this with your own database connection details.

from langchain_community.utilities.sql_database import SQLDatabase

database_file = r"path_to_db\my_database.db"

sql_database = SQLDatabase.from_uri(f"sqlite:///{database_file}")

Initiate the LLM

We will use Groq as our LLM and Langchain to initiate the LLM.

language_model = ChatGroq(

temperature=0,

model_name="llama3-70b-8192",

api_key="your_api_key"

)

Creating Tools

Next, we will create a set of tools that will be used by our AI agents to interact with the database and perform various tasks.

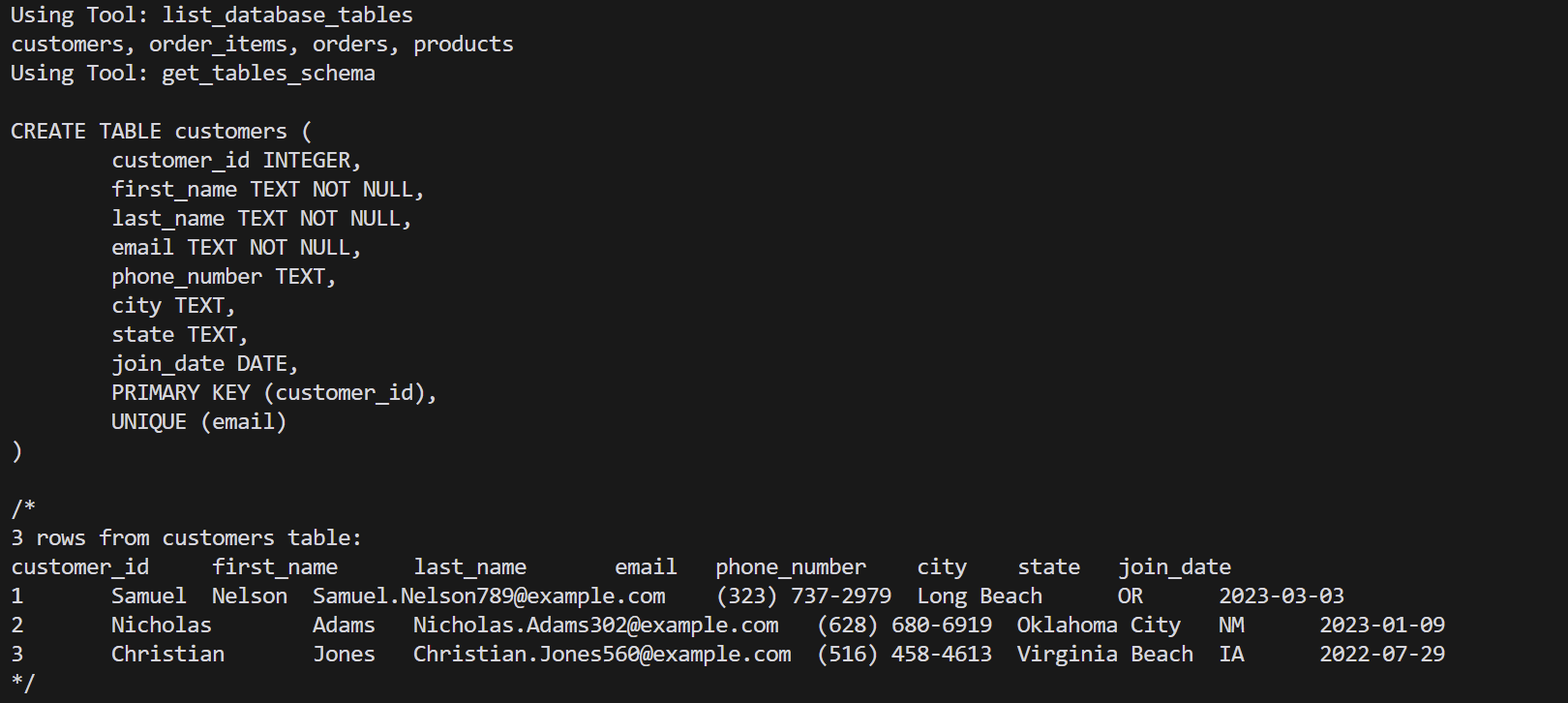

List Database Tables

The list_database_tables tool allows us to retrieve a list of available tables in the database.

@tool("list_database_tables")

def list_database_tables(config: Dict[str, Any] = {}) -> str:

"""List the available tables in the database"""

return ListSQLDatabaseTool(db=sql_database).run(config)

Get Tables Schema

The get_tables_schema tool retrieves the schema and sample rows for specified tables.

@tool("get_tables_schema")

def get_tables_schema(tables: str, config: Dict[str, Any] = {}) -> str:

"""

Input is a comma-separated list of tables, output is the schema and sample rows

for those tables. Be sure that the tables actually exist by calling `list_database_tables` first!

Example Input: table1, table2, table3

"""

schema_tool = InfoSQLDatabaseTool(db=sql_database)

return schema_tool.run({"table_names": tables, **config})

Execute SQL Query

The execute_sql_query tool allows us to execute SQL queries against the database and retrieve the results.

@tool("execute_sql_query")

def execute_sql_query(sql_query: str, config: Dict[str, Any] = {}) -> str:

"""Execute a SQL query against the database. Returns the result"""

return QuerySQLDataBaseTool(db=sql_database).run({"query": sql_query, **config})

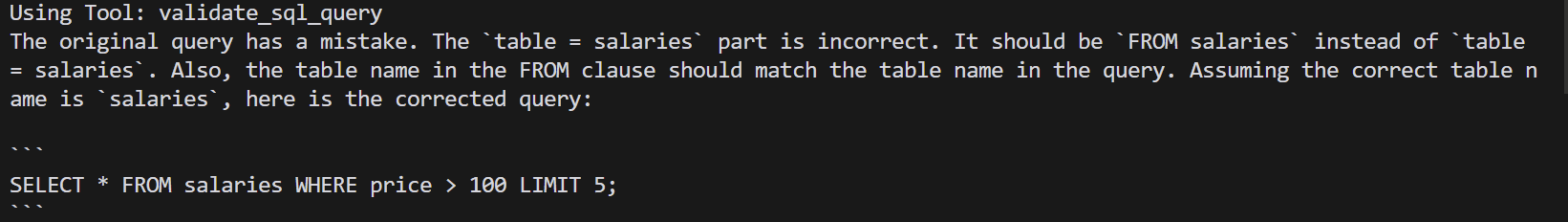

Validate SQL Query

The validate_sql_query tool checks the correctness of a SQL query before executing it.

@tool("validate_sql_query")

def validate_sql_query(sql_query: str, config: Dict[str, Any] = {}) -> str:

"""

Use this tool to double check if your query is correct before executing it. Always use this

tool before executing a query with `execute_sql_query`.

"""

return QuerySQLCheckerTool(db=sql_database, llm=language_model).run({"query": sql_query, **config})

Creating Agents

Agents are AI entities that possess specific roles, goals, and capabilities. We will create three agents: a database developer, a data analyst, and a report editor.

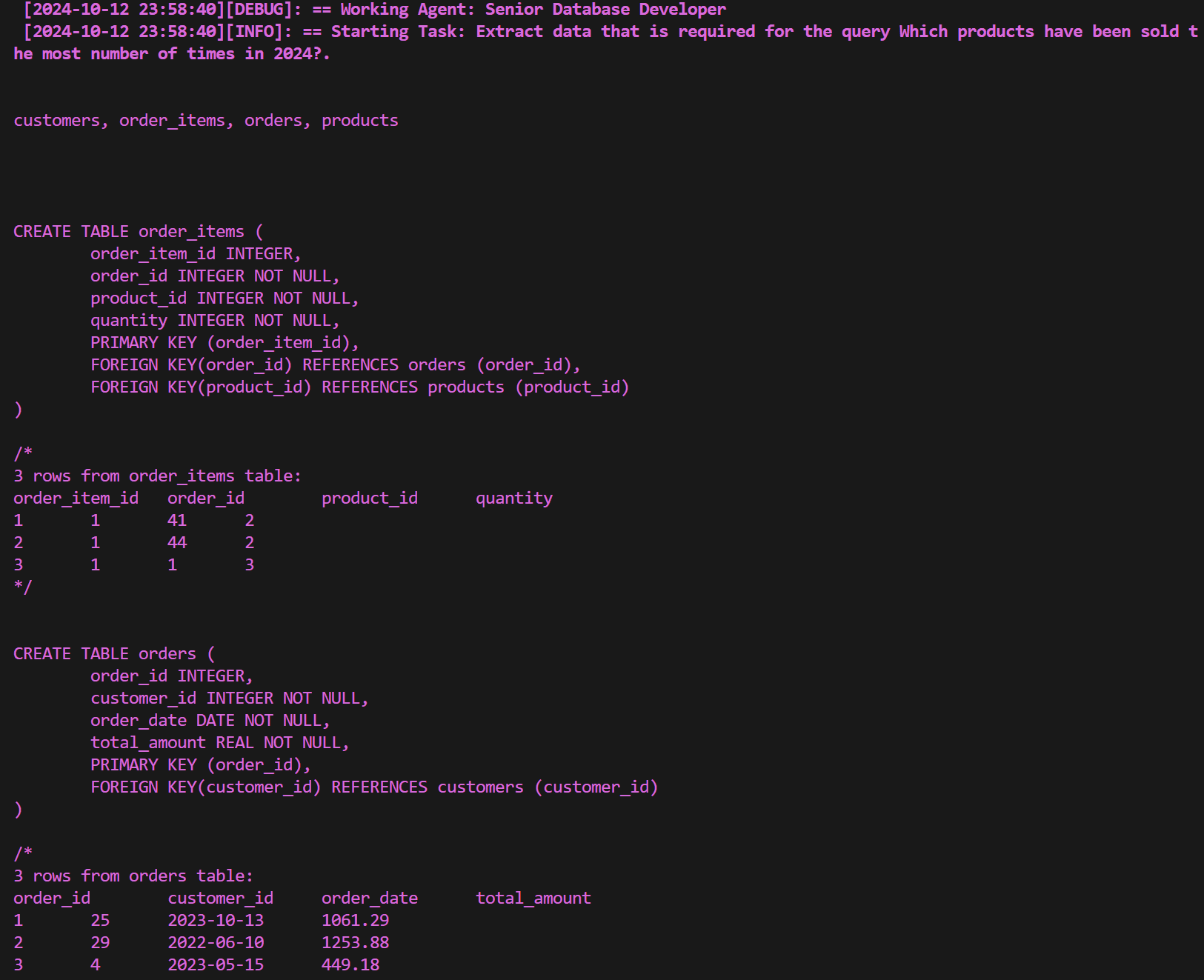

Database Developer

The database developer agent is responsible for constructing and executing SQL queries based on a given request.

database_developer = Agent(

role="Senior Database Developer",

goal="Construct and execute SQL queries based on a request",

backstory=dedent(

"""

You are an experienced database engineer who is master at creating efficient and complex SQL queries.

You have a deep understanding of how different databases work and how to optimize queries.

Use the `list_database_tables` to find available tables.

Use the `get_tables_schema` to understand the metadata for the tables.

Use the `execute_sql_query` to check your queries for correctness.

Use the `validate_sql_query` to execute queries against the database.

"""

),

llm=language_model,

tools=[list_database_tables, get_tables_schema, execute_sql_query, validate_sql_query],

allow_delegation=False,

)

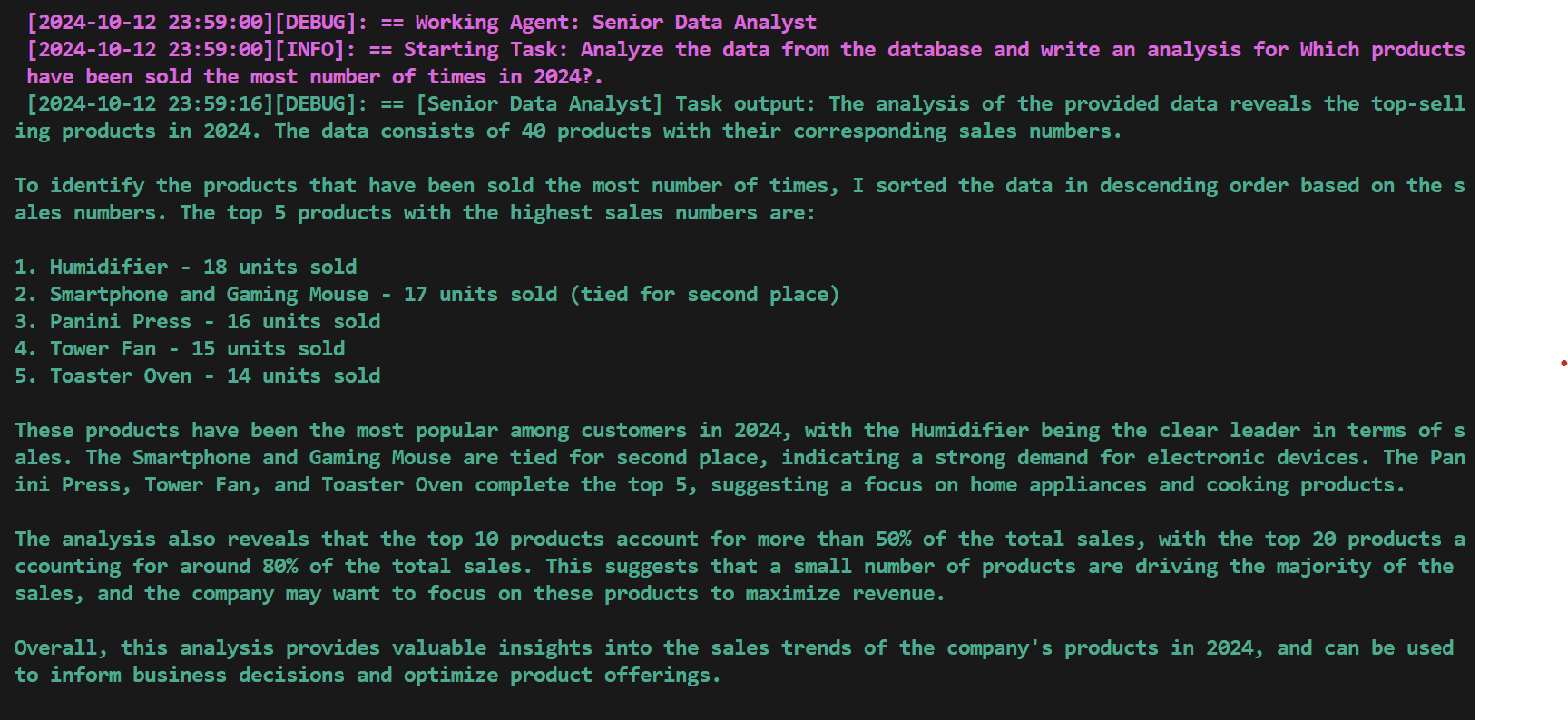

Data Analyst

The data analyst agent receives data from the database developer and performs analysis on it.

data_analyst = Agent(

role="Senior Data Analyst",

goal="You receive data from the database developer and analyze it",

backstory=dedent(

"""

You have deep experience with analyzing datasets using Python.

Your work is always based on the provided data and is clear,

easy-to-understand and to the point. You have attention

to detail and always produce very detailed work (as long as you need).

"""

),

llm=language_model,

allow_delegation=False,

)

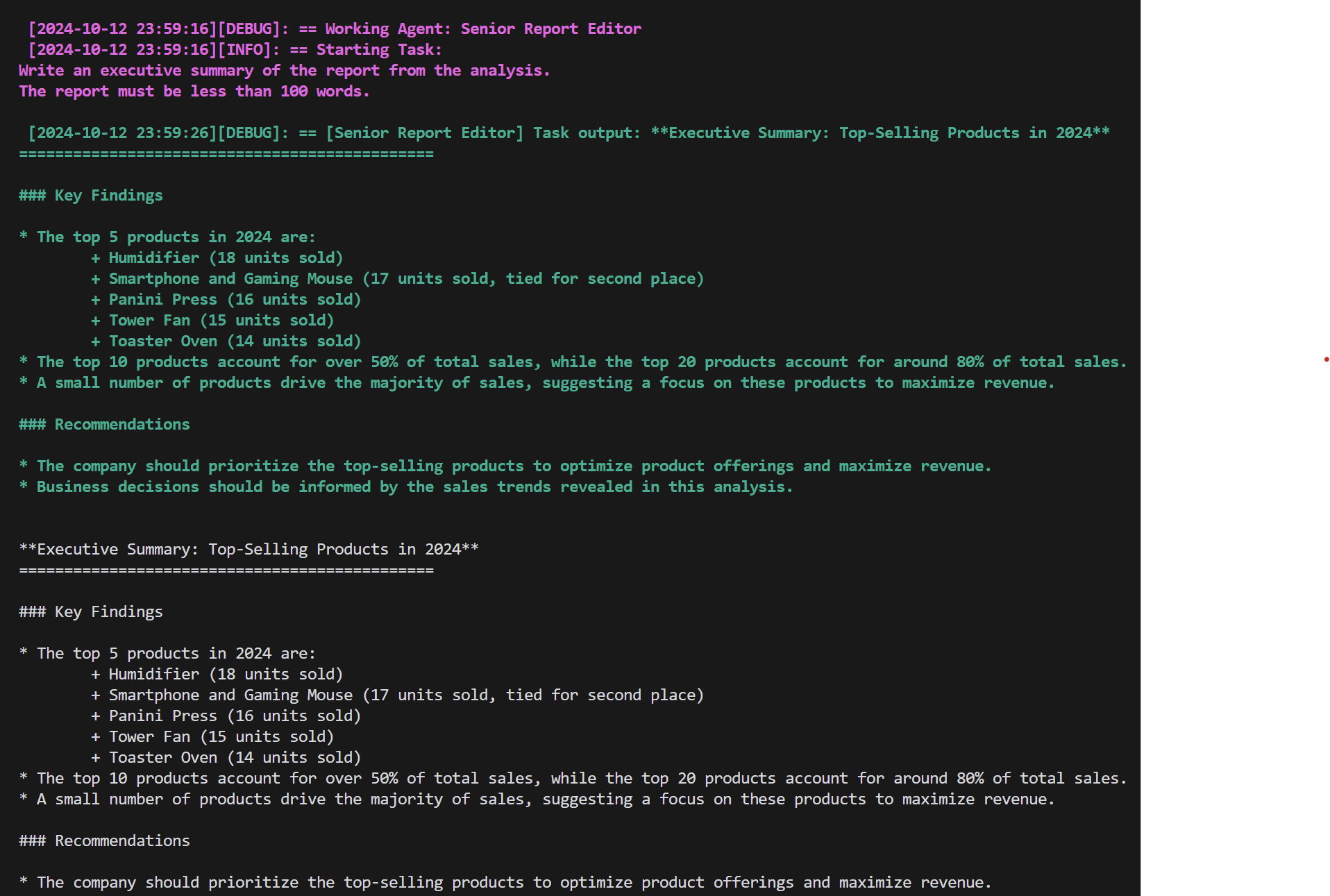

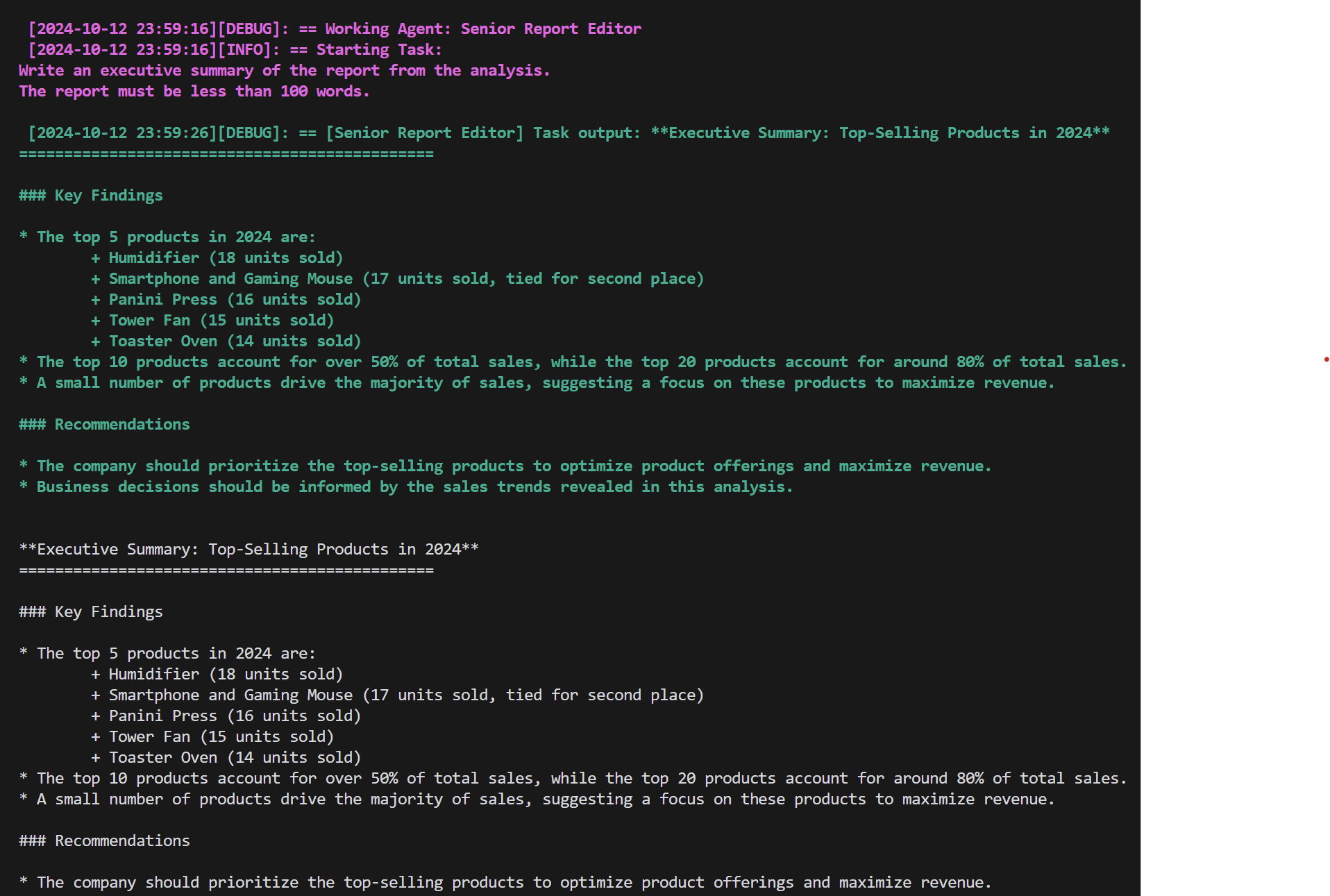

Report Editor

The report editor agent generates an executive summary based on the analysis performed by the data analyst.

report_editor = Agent(

role="Senior Report Editor",

goal="Write an executive summary type of report based on the work of the analyst",

backstory=dedent(

"""

Your writing style is well known for clear and effective communication.

You always summarize long texts into bullet points that contain the most

important details.

"""

),

llm=language_model,

allow_delegation=False,

)

Creating Tasks

Tasks define the specific actions that agents need to perform. We will create three tasks: extracting database data, analyzing the extracted data, and generating an executive summary.

Extract Database Data

The extract_database_data task instructs the database developer agent to extract the required data based on a given query.

extract_database_data = Task(

description="Extract data that is required for the query {query}.",

expected_output="Database result for the query",

agent=database_developer,

)

Analyze Extracted Data

The analyze_extracted_data task instructs the data analyst agent to analyze the data extracted by the database developer and provide a detailed analysis.

analyze_extracted_data = Task(

description="Analyze the data from the database and write an analysis for {query}.",

expected_output="Detailed analysis text",

agent=data_analyst,

context=[extract_database_data],

)

Generate Executive Summary

The generate_executive_summary task instructs the report editor agent to generate an executive summary based on the analysis performed by the data analyst.

generate_executive_summary = Task(

description=dedent(

"""

Write an executive summary of the report from the analysis.

The report must be less than 100 words.

"""

),

expected_output="Markdown report",

agent=report_editor,

context=[analyze_extracted_data],

)

Creating the Crew

A crew is a group of agents that work together to accomplish a specific goal. We will create an analysis_crew that consists of the database developer, data analyst, and report editor agents.

analysis_crew = Crew(

agents=[database_developer, data_analyst, report_editor],

tasks=[extract_database_data, analyze_extracted_data, generate_executive_summary],

process=Process.sequential,

verbose=2,

memory=False,

)

Executing the Analysis

Finally, we can execute the analysis by providing a query input to the analysis_crew and retrieving the final result.

query_input = {

"query": "Which products have been sold the most number of times in 2024?"

}

final_result = analysis_crew.kickoff(inputs=query_input)

print(final_result)

final_result will contain the executive summary generated by the report editor agent based on the analysis performed by the data analyst agent using the data extracted by the database developer agent.

Conclusion

In this tutorial, we explored how to leverage AI for data analysis in a business context using the Crewai and Langchain Python libraries. By creating agents with specific roles and capabilities, defining tasks, and assembling them into a crew, we can enable marketing and sales teams to converse with their data, uncover valuable insights, and make informed decisions.

The power of AI lies in its ability to process vast amounts of data, identify patterns, and generate meaningful insights that can drive business growth. By incorporating AI into their data analysis workflows, businesses can gain a competitive edge, optimize their operations, and deliver personalized experiences to their customers.

Remember to adapt this tutorial to your specific business needs, connect to your own databases, and customize the agents and tasks accordingly. With the right tools and approach, AI can become a valuable asset in your business's data-driven decision-making process. Happy automating!