- Published on

Data-Driven Decisions - Unleash Your CRM Data with BigQuery & Cloud Functions

In today’s fast-paced business environment, leveraging data for strategic decisions is paramount. Integrating Customer Relationship Management (CRM) systems with data analytics platforms like Google BigQuery can provide organizations with powerful insights, helping them to stay ahead in the market. This blog post explores a real-world use case of using Google Cloud Functions to fetch data from a CRM system and store it in BigQuery, focusing on the business and employee impact of this integration.

Setting Up Google Cloud Function

Prerequisites

Before diving into the integration process, ensure you have the following prerequisites:

Google Cloud Platform (GCP) Account : Set up a GCP account if you haven't already.

CRM Access : Ensure you have access to your CRM system and its API.

BigQuery Dataset : Create a dataset in BigQuery where the data will be stored.

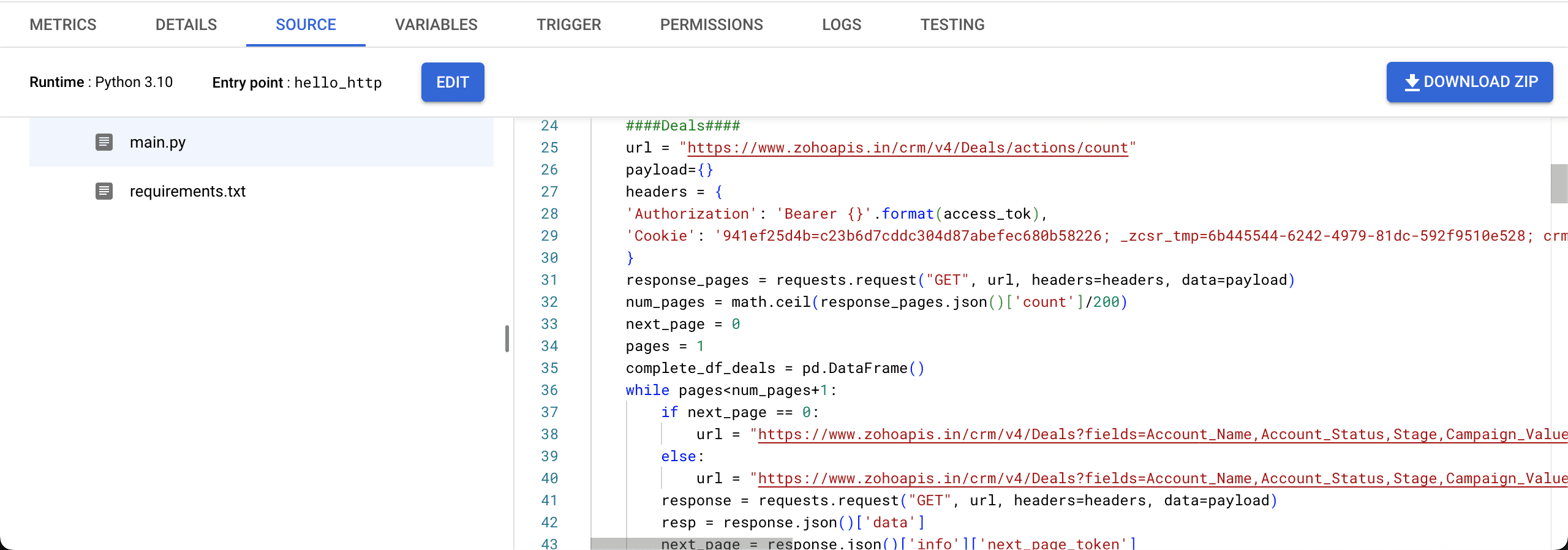

Creating the Cloud Function

Google Cloud Functions is a serverless compute service that allows you to run your code in response to events. We will create a function that fetches data from the CRM and stores it in BigQuery.

Function Code : The following Python code fetches data from Zoho CRM and uploads it to BigQuery.

import functions_framework

import math

from google.cloud import bigquery

import pandas as pd

import requests

@functions_framework.http

def crm_to_bigquery(request):

# Fetch access token

url = "https://accounts.zoho.in/oauth/v2/token"

payload = {

'client_id': 'your_client_id',

'client_secret': 'your_client_secret',

'refresh_token': 'your_refresh_token',

'grant_type': 'refresh_token'

}

response = requests.post(url, data=payload)

access_token = response.json()['access_token']

# Fetch CRM data

def fetch_crm_data(endpoint):

headers = {'Authorization': f'Bearer {access_token}'}

response = requests.get(endpoint, headers=headers)

return pd.DataFrame(response.json()['data'])

deals_df = fetch_crm_data("https://www.zohoapis.in/crm/v4/Deals")

accounts_df = fetch_crm_data("https://www.zohoapis.in/crm/v4/Accounts")

leads_df = fetch_crm_data("https://www.zohoapis.in/crm/v4/Leads")

contacts_df = fetch_crm_data("https://www.zohoapis.in/crm/v4/Contacts")

# Initialize BigQuery client

client = bigquery.Client()

dataset_id = 'your_dataset'

# Load data into BigQuery

def load_to_bigquery(df, table_id):

table_ref = client.dataset(dataset_id).table(table_id)

job_config = bigquery.LoadJobConfig(write_disposition=bigquery.WriteDisposition.WRITE_TRUNCATE)

job = client.load_table_from_dataframe(df, table_ref, job_config=job_config)

job.result()

load_to_bigquery(deals_df, 'zoho_deals')

load_to_bigquery(accounts_df, 'zoho_accounts')

load_to_bigquery(leads_df, 'zoho_leads')

load_to_bigquery(contacts_df, 'zoho_contacts')

return "Data successfully loaded into BigQuery"

Fetching Data from Zoho CRM

Authentication and Authorization

To securely fetch data from Zoho CRM, we use OAuth2.0 for authentication. The access token is obtained using client credentials and a refresh token.

url = "https://accounts.zoho.in/oauth/v2/token"

payload = {

'client_id': 'your_client_id',

'client_secret': 'your_client_secret',

'refresh_token': 'your_refresh_token',

'grant_type': 'refresh_token'

}

response = requests.post(url, data=payload)

access_token = response.json()['access_token']

Data Extraction

With the access token, we can now query Zoho CRM endpoints to fetch the required data. For example, to get deals data:

def fetch_crm_data(endpoint):

headers = {'Authorization': f'Bearer {access_token}'}

response = requests.get(endpoint, headers=headers)

return pd.DataFrame(response.json()['data'])

deals_df = fetch_crm_data("https://www.zohoapis.in/crm/v4/Deals")

Loading Data into BigQuery

Data Transformation

Before loading data into BigQuery, you might need to transform it. For instance, ensuring data types are compatible.

def transform_data(df):

# Perform any necessary transformations

return df

Uploading Data

Utilize the BigQuery client library to load data into the dataset.

def load_to_bigquery(df, table_id):

table_ref = client.dataset(dataset_id).table(table_id)

job_config = bigquery.LoadJobConfig(write_disposition=bigquery.WriteDisposition.WRITE_TRUNCATE)

job = client.load_table_from_dataframe(df, table_ref, job_config=job_config)

job.result()

Tips and Tricks

Optimize Your Queries

When working with large datasets, optimize your BigQuery queries to reduce processing time and cost. Use partitioned tables and appropriate data types to enhance performance.

Monitor and Maintain

Regularly monitor your Google Cloud Function and BigQuery jobs to ensure they run smoothly. Set up alerts for any failures or performance issues.

Security Best Practices

Ensure your data is secure by following best practices for authentication and authorization. Use service accounts with the least privilege principle and keep your credentials safe.

By following these guidelines and leveraging the power of Google Cloud Functions and BigQuery, you can transform your CRM data into actionable insights, driving business growth and efficiency. Happy automating!